FriendliAI Presents New Serving System ‘Orca’ for Large-scale AI Models at OSDI 2022

The new system reduces the cost of using large-scale generative models, making them affordable for a wide range of users

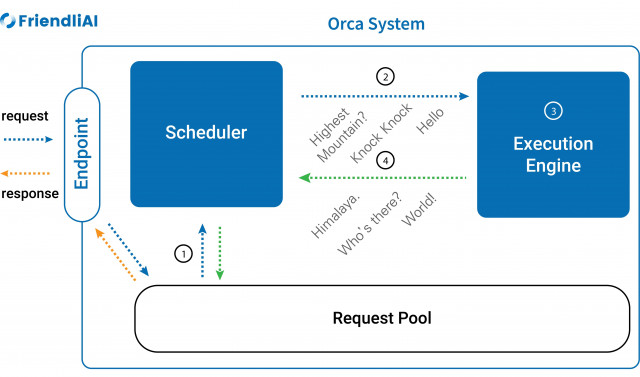

FriendliAI's Orca is a serving system that enables the efficient operation of large-scale AI models.

SEOUL--(Newswire)--FriendliAI has presented ‘Orca’, a serving system that dramatically improves the serving efficiency of large-scale generative models, at OSDI 2022. FriendliAI is a startup that provides PeriFlow, a platform for developing large-scale AI models.

Orca is a serving system that enables the efficient operation of large-scale AI models. It removes the unnecessary delays of existing serving systems by using two core techniques: ‘iteration-level scheduling’ and ‘selective batching.’

To illustrate, imagine a group of friends who would like to ride a four-seat tandem bicycle along the Hudson River. Some want to bike for only 10 minutes, whereas others want a full hour. Under existing serving systems, once a ride has begun, every rider is forced to keep biking until the rider with the longest target time is satisfied, and no other friends can join until that group has finished and returned to the starting point.

Orca solves this problem with a ‘shuttle run’ system in which the group returns to the starting point every 10 minutes, so riders can hop off individually soon after they reach their goals and latecomers can join the ride without a long wait. This shuttle run system corresponds to iteration-level scheduling. Orca's second technique, selective batching, groups riders who could not originally be grouped together before the biking starts.
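
The difference between the two approaches in the bicycle analogy can be sketched with a toy simulation. This is only an illustration under simplifying assumptions, not Orca's actual implementation: all riders are assumed to be waiting at the start, one "iteration" stands for one 10-minute lap, and the function names `request_level` and `iteration_level` are hypothetical. Selective batching is not modeled.

```python
from collections import deque


def request_level(lengths, batch_size):
    """Finish time of each request when a whole batch must run until its
    longest member is done (the 'existing serving system' in the analogy)."""
    if any(n < 1 for n in lengths):
        raise ValueError("every request needs at least one iteration")
    finish = [0] * len(lengths)
    clock = 0
    for start in range(0, len(lengths), batch_size):
        batch = range(start, min(start + batch_size, len(lengths)))
        # The batch occupies the bike until its longest ride is over.
        clock += max(lengths[i] for i in batch)
        for i in batch:
            finish[i] = clock
    return finish


def iteration_level(lengths, batch_size):
    """Finish time of each request when the batch is re-formed after every
    iteration (the 'shuttle run' in the analogy)."""
    if any(n < 1 for n in lengths):
        raise ValueError("every request needs at least one iteration")
    waiting = deque(range(len(lengths)))
    remaining = {}  # request index -> iterations still needed
    finish = [0] * len(lengths)
    clock = 0
    while waiting or remaining:
        # Fill any free seats with waiting requests before the next lap.
        while waiting and len(remaining) < batch_size:
            i = waiting.popleft()
            remaining[i] = lengths[i]
        clock += 1  # run one iteration for everyone on the bike
        for i in list(remaining):
            remaining[i] -= 1
            if remaining[i] == 0:
                finish[i] = clock  # finished riders hop off immediately
                del remaining[i]
    return finish


# Six riders, four seats; 1 = a 10-minute ride, 6 = a one-hour ride.
rides = [1, 6, 1, 6, 1, 1]
print(request_level(rides, 4))    # [6, 6, 6, 6, 7, 7]
print(iteration_level(rides, 4))  # [1, 6, 1, 6, 2, 2]
```

In this toy run the short rides finish after one or two laps instead of six or seven, while the hour-long rides are not delayed.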

With Orca, large-scale models can perform their generative tasks tens of times faster than with existing serving systems (measured with GPT-3 175B). In addition, the cost of using large-scale models like GPT-3 drops to roughly a hundredth with Orca. As a result, the challenges of using large-scale models are greatly reduced, and Orca can make these models accessible to a much wider range of users.

The research paper, ‘Orca: A Distributed Serving System for Transformer-Based Generative Models’, was presented on July 12th at OSDI 2022 (16th USENIX Symposium on Operating Systems Design and Implementation), a top-tier conference in the field of computer systems. Orca is already being used in production.

“Not only is it important to acquire data to improve model learning, but maximizing the efficiency of the serving system itself allows users to make use of large-scale generative models like OpenAI’s GPT-3,” said Byung-Gon Chun, the CEO of FriendliAI. “I expect this research will increase opportunities to use large-scale models on a variety of products.”

About FriendliAI

FriendliAI, which presented Orca, is a startup providing PeriFlow, a platform that makes large-scale AI development convenient for everyone. PeriFlow is a cloud-based platform that automates the whole process of AI development, from training to inference and service deployment. FriendliAI has also open-sourced ‘GPT-FAI 13B’, a large-scale language model with 13 billion parameters that was trained on PeriFlow.

Byung-Gon Chun, the corresponding author of the paper, received his Ph.D. in computer science from the University of California, Berkeley. He is interested in building services that make large-scale AI simple. Chun won the ACM SIGOPS Hall of Fame Award in 2020 and the EuroSys Test of Time Award in 2021. He has also received research awards from Google, Amazon, Facebook, and Microsoft.